Over 400 million tweets are posted every day. But accessing 100% of these tweets (say, for disaster response purposes) requires access to Twitter’s “Firehose”. The latter, however, can be prohibitively expensive and also requires serious infrastructure to manage. This explains why many (all?) of us in the Crisis Computing & Humanitarian Technology space use Twitter’s “Streaming API” instead. But how representative are tweets sampled through the API vis-a-vis overall activity on Twitter? This important question is posed and answered in this new study, which uses Syria as a case study.

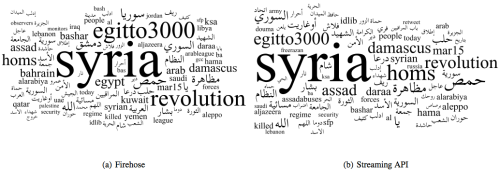

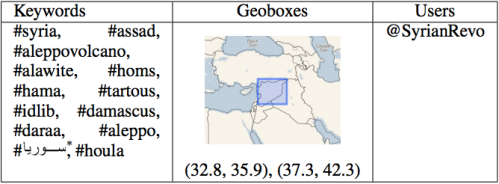

The analysis focused on “Tweets collected in the region around Syria during the period from December 14, 2011 to January 10, 2012.” The first dataset was collected using Firehose access while the second was sampled from the API. The tag clouds above display the most frequent terms found in each dataset. The hashtags and geoboxes used for the data collection are listed in the table below.

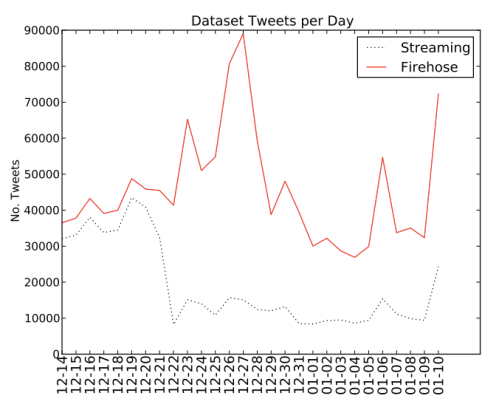

The graph below shows the number of tweets collected between December 14th, 2011 and January 10th, 2012. This amounted to 528,592 tweets from the API and 1,280,344 tweets from the Firehose. On average, the API captures 43.5% of the tweets available on the Firehose. “One of the more interesting results in this dataset is that as the data in the Firehose spikes, the Streaming API coverage is reduced. One possible explanation for this phenomenon could be that due to the Western holidays observed at this time, activity on Twitter may have reduced causing the 1% threshold to go down.”
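To make the coverage figure concrete, here is a minimal Python sketch of the arithmetic. The two totals come from the post; the function names and the two-day example are made up for illustration. Note that dividing the totals gives roughly 41.3%, not 43.5%. One plausible reading (an assumption on my part, not something stated above) is that the 43.5% is an average of per-day coverage ratios, which can differ from the aggregate ratio when coverage drops on high-volume days.

```python
# Totals reported in the post for Dec 14, 2011 to Jan 10, 2012.
STREAMING_TWEETS = 528_592
FIREHOSE_TWEETS = 1_280_344


def coverage(sample_count: int, full_count: int) -> float:
    """Fraction of the full stream that the sample captured."""
    if full_count <= 0:
        raise ValueError("full_count must be positive")
    return sample_count / full_count


def mean_daily_coverage(daily_pairs) -> float:
    """Average of per-day coverage ratios; each pair is (sample, full)."""
    ratios = [coverage(sample, full) for sample, full in daily_pairs]
    return sum(ratios) / len(ratios)


aggregate = coverage(STREAMING_TWEETS, FIREHOSE_TWEETS)
print(f"Aggregate coverage: {aggregate:.1%}")

# Hypothetical two-day example (made-up numbers): a quiet day with 50%
# coverage and a spike day with 20% coverage.
toy_days = [(50, 100), (60, 300)]
toy_aggregate = coverage(110, 400)
toy_mean = mean_daily_coverage(toy_days)
print(f"Toy aggregate: {toy_aggregate:.1%}, toy mean of daily ratios: {toy_mean:.1%}")
```

In the toy example the spike day pulls the aggregate ratio below the mean of the daily ratios, which is the same direction as the gap between 41.3% and 43.5%.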

The authors, Fred Morstatter, Jürgen Pfeffer, Huan Liu and Kathleen Carley, also carry out hashtag analysis using each dataset. “Here we see mixed results at small values of n [top hashtags], indicating that the Streaming data may not be good for finding the top hashtags. At larger values of n, we see that the Streaming API does a better job of estimating the top hashtags in the Firehose data.” In addition, the analysis reveals that the “Streaming API data does not consistently find the top hashtags, in some cases revealing reverse correlation with the Firehose data […]. This could be indicative of a filtering process in Twitter’s Streaming API which causes a misrepresentation of top hashtags in the data.”
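For readers who want to try this kind of comparison on their own data, the sketch below ranks the Firehose's top n hashtags and checks how well the Streaming counts preserve that order. It uses Kendall's tau (the simple tau-a variant) as the rank-correlation measure; that choice is my assumption, since the post does not name the statistic. The hashtag counts are invented for illustration only. A value near 1 means the two rankings agree, and a negative value corresponds to the "reverse correlation" mentioned in the quote.

```python
from collections import Counter
from itertools import combinations


def kendall_tau(xs, ys) -> float:
    """Kendall's tau-a for two equal-length score lists (tied pairs add zero)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        product = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    total_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / total_pairs


def top_n_agreement(firehose: Counter, streaming: Counter, n: int) -> float:
    """Rank agreement between datasets on the Firehose's top-n hashtags."""
    top = [tag for tag, _ in firehose.most_common(n)]
    # Hashtags missing from the Streaming sample count as zero occurrences.
    return kendall_tau([firehose[t] for t in top], [streaming[t] for t in top])


# Made-up counts, for illustration only.
firehose_counts = Counter({"#syria": 900, "#assad": 400, "#homs": 300, "#damascus": 120})
streaming_counts = Counter({"#syria": 350, "#assad": 90, "#homs": 140, "#damascus": 60})

tau = top_n_agreement(firehose_counts, streaming_counts, n=4)
print(f"tau for top 4 hashtags: {tau:.2f}")
```

Running this for several values of n would show whether agreement improves as n grows, which is the pattern the authors describe.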

In terms of social network analysis, the authors were able to show that “50% to 60% of the top 100 key-players [can be identified] when creating the networks based on one day of Streaming API data.” Aggregating more days’ worth of data “can increase the accuracy substantially. For network level measures, first in-depth analysis revealed interesting correlation between network centralization indexes and the proportion of data covered by the Streaming API.”
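The key-player comparison can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: it treats the network as undirected and uses plain degree as a stand-in for whatever centrality measures the study actually used. The function names and the toy edge lists are hypothetical.

```python
from collections import Counter


def top_k_by_degree(edges, k):
    """Return the set of k nodes with the highest degree (undirected edges)."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return {node for node, _ in degree.most_common(k)}


def key_player_recall(full_edges, sample_edges, k) -> float:
    """Share of the full network's top-k nodes that are also top-k in the sample."""
    full_top = top_k_by_degree(full_edges, k)
    if not full_top:
        raise ValueError("full network has no nodes")
    sample_top = top_k_by_degree(sample_edges, k)
    return len(full_top & sample_top) / len(full_top)


# Toy networks (made up): the sample misses some edges and adds weight elsewhere.
full_edges = [("a", "b"), ("a", "c"), ("a", "d"), ("b", "c"), ("b", "e"), ("d", "e")]
sample_edges = [("a", "b"), ("a", "c"), ("a", "d"), ("d", "e"), ("d", "c")]

recall = key_player_recall(full_edges, sample_edges, k=2)
print(f"Top-2 key players recovered: {recall:.0%}")
```

Concatenating several days of sampled edges before calling `key_player_recall` is one simple way to test whether aggregation improves the overlap on your own data.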

Finally, the study also compares the geolocation of tweets. More specifically, the authors assess how the “geographic distribution of the geolocated tweets is affected by the sampling performed by the Streaming API. The number of geotagged tweets is low, with only 16,739 geotagged tweets in the Streaming data (3.17%) and 18,579 in the Firehose data (1.45%).” Still, the authors find that “despite the difference in tweets collected on the whole we get 90.10% coverage of geotagged tweets.”
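The three percentages quoted above follow directly from the counts reported in the post, as this short Python check shows. The helper name `pct` is just for illustration.

```python
# Counts reported in the post.
STREAMING_TOTAL = 528_592
FIREHOSE_TOTAL = 1_280_344
STREAMING_GEOTAGGED = 16_739
FIREHOSE_GEOTAGGED = 18_579


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to 2 decimals."""
    return round(100 * part / whole, 2)


streaming_geo_share = pct(STREAMING_GEOTAGGED, STREAMING_TOTAL)
firehose_geo_share = pct(FIREHOSE_GEOTAGGED, FIREHOSE_TOTAL)
geo_coverage = pct(STREAMING_GEOTAGGED, FIREHOSE_GEOTAGGED)

print(f"Geotagged share of Streaming data: {streaming_geo_share}%")
print(f"Geotagged share of Firehose data: {firehose_geo_share}%")
print(f"Streaming coverage of geotagged Firehose tweets: {geo_coverage}%")
```

The contrast is the interesting part: the Streaming sample holds well under half of all Firehose tweets but about nine in ten of the geotagged ones.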

In sum, the study finds that “the results of using the Streaming API depend strongly on the coverage and the type of analysis that the researcher wishes to perform. This leads to the next question concerning the estimation of how much data we actually get in a certain time period.” This is critical if researchers want to place their results into context and potentially apply statistical methods to account (and correct) for bias. The authors suggest that in some cases the Streaming API coverage can be estimated. In future research, they hope to “find methods to compensate for the biases in the Streaming API to provide a more accurate picture of Twitter activity to researchers.” In particular, they want to “determine whether the methodology presented here will yield similar results for Twitter data collected from other domains, such as natural [disasters], protest & elections.”

The authors will present their paper at this year’s International Conference on Weblogs and Social Media (ICWSM). So I look forward to meeting them there to discuss related research we are carrying out at QCRI.

The real questions are:

– Is anything new revealed here that responders didn’t already know regarding the truth on the ground;

– in a conflict, an environment in which the master narrative is under contestation, can we use algorithms to surface those alternate narratives rather than seeing them as errors; and,

– how do we address the crushed expectations of individual reporters when the provision of political freedoms at the individual level is not the goal (it’s a systems problem needing a systems solution)?
