GeoFeedia was not originally designed to support humanitarian operations. But last year’s blog post on the potential of GeoFeedia for crisis mapping caught the interest of CEO Phil Harris. So he kindly granted the Standby Volunteer Task Force (SBTF) free access to the platform. In return, we provided his team with feedback on what features (listed here) would make GeoFeedia more useful for digital disaster response. This was back in summer 2012. I recently learned that they’ve been quite busy since. Indeed, I had the distinct pleasure of sharing the stage with Phil and his team at this superb conference on social media for emergency management. After listening to their talk, I realized it was high time to publish an update on GeoFeedia, especially since we had used the tool just two months earlier in response to Typhoon Pablo, one of the worst disasters to hit the Philippines in the past 100 years.

The 1-minute video is well worth watching if you’re new to GeoFeedia. The platform enables hyper-local searches for information by location across multiple social media channels such as Twitter, YouTube, Flickr, Picasa & now Instagram. One of my favorite GeoFeedia features is the awesome geofeed (digital fence), which you can learn more about here. So what’s new besides Instagram? Well, the first suggestion I made last year was to provide users with the option of searching by both location and topic, rather than by location alone. And presto, this is now possible, which means that digital humanitarians today can zoom into a disaster-affected area and filter by social media type, date and hashtag. This makes the geofeed feature even more compelling for crisis response, especially since geofeeds can also be saved and shared.

The vast majority of social media monitoring tools out there first filter by keyword and hashtag. Only later do they add location. As Phil points out, this means they easily miss 70% of hyper-local social media reports. Most users and organizations, who pay hefty licensing fees to use these platforms, are typically unaware of this. The fact that GeoFeedia first filters by location is not an accident. This recent study (PDF) of the 2012 London Olympics showed that social media users posted close to 170,000 geo-tagged posts to Twitter, Instagram, Flickr, Picasa and YouTube during the games. But only 31% of these geo-tagged posts contained any Olympic-specific keywords and/or hashtags! So they decided to analyze another large event and again found that the number of results dropped by about 70% when not first filtering by location. Phil argues that people in a crisis situation obviously don’t wait for keywords or hashtags to form, so he expects this drop to happen for disasters as well. “Traditional keyword and hashtag search [must] thus be complemented with a geographical search in order to provide a full picture of social media content that is contextually relevant to an event.”
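To make the difference concrete, here is a minimal Python sketch of location-first versus keyword-first filtering. It is purely illustrative and is not GeoFeedia's code or API: the `Post` and `BoundingBox` types, the sample posts and the rough London bounding box are all assumptions made for the example, and a simple rectangle stands in for a real geofeed.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """Hypothetical geo-tagged social media post."""
    text: str
    lat: float
    lon: float


@dataclass
class BoundingBox:
    """Hypothetical rectangular geofence (does not handle the antimeridian)."""
    south: float
    west: float
    north: float
    east: float

    def contains(self, post: Post) -> bool:
        return (self.south <= post.lat <= self.north
                and self.west <= post.lon <= self.east)


def filter_by_location(posts, box):
    """Keep every post inside the box, whatever its wording."""
    return [p for p in posts if box.contains(p)]


def filter_by_keywords(posts, keywords):
    """Keep posts whose text contains at least one keyword (case-insensitive)."""
    lowered = [k.lower() for k in keywords]
    return [p for p in posts if any(k in p.text.lower() for k in lowered)]


# Made-up sample data: three posts in London, one elsewhere.
LONDON = BoundingBox(south=51.28, west=-0.51, north=51.69, east=0.33)
posts = [
    Post("Great seats at the #Olympics opening ceremony", 51.54, -0.01),
    Post("Crowds everywhere near Stratford station", 51.54, 0.00),
    Post("Road closed, huge queue for the tube", 51.50, -0.12),
    Post("Watching the #Olympics on TV", 40.71, -74.00),
]

# Location first: all three local posts survive, keyword or not.
local = filter_by_location(posts, LONDON)

# Keyword first: two posts match, but only one of them is actually local,
# and the two local posts without a hashtag are never seen.
keyword_first = filter_by_keywords(posts, ["olympics"])
local_with_keyword = filter_by_keywords(local, ["olympics"])
```

In this toy data set, a keyword-first search would surface only one of the three local posts, which is the kind of gap the study describes.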

One of my other main recommendations to Phil & team last year had to do with analytics. There is a strong need for an “Analytics function that produces summary statistics and trends analysis for a geofeed of interest. This is where Geofeedia could better capture temporal dynamics by including charts, graphs and simple time-series analysis to depict how events have been unfolding over the past hour vs 12 hours, 24 hours, etc.” Well sure enough, one of GeoFeedia’s major new features is a GeoAnalytics Dashboard, an interface that enables users to discover temporal trends and patterns in social media, and to do so by geofeed. This means a user can now draw a geofeed around a specific area of interest in a given disaster zone and search for pictures from a specified date that capture major infrastructure damage and whose tags or descriptions contain the words “#earthquake,” “damage,” “buildings,” etc. As Phil rightly points out, this provides a “huge time advantage during a crisis to give yet another filtered layer of intelligence; in effect, social media that is highly relevant and actionable ‘bubbling-up to the top’ of the pile.”
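As a rough illustration of the “simple time-series analysis” idea, the Python sketch below buckets post timestamps by hour. It says nothing about how the GeoAnalytics Dashboard is actually built; the `hourly_counts` helper and the sample timestamps are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime


def hourly_counts(timestamps):
    """Return (hour, count) pairs, sorted by hour, for the given timestamps."""
    buckets = Counter(
        ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps
    )
    return sorted(buckets.items())


# Made-up timestamps for posts collected inside one geofeed.
sample = [
    datetime(2012, 12, 4, 9, 5),
    datetime(2012, 12, 4, 9, 40),
    datetime(2012, 12, 4, 10, 15),
    datetime(2012, 12, 4, 10, 20),
    datetime(2012, 12, 4, 10, 55),
]

series = hourly_counts(sample)
for hour, count in series:
    print(hour.strftime("%Y-%m-%d %H:00"), count)
```

A series like this is the raw material for the charts and trend lines described above.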

I truly am a huge fan of the GeoFeedia platform. Plus, Phil & team have been very responsive to our interests in using their tool for disaster response. So I’m excited to see which features they build out next. They’ve already got a “data portability” functionality that enables data export. Users can also publish content from GeoFeedia directly to their own social networks. Moreover, the filtered content produced by geofeeds can also be shared with individuals who do not have a GeoFeedia account. In any event, I hope the team will take into account two items from my earlier wish list, namely Sentiment Analysis and GeoAlerts.

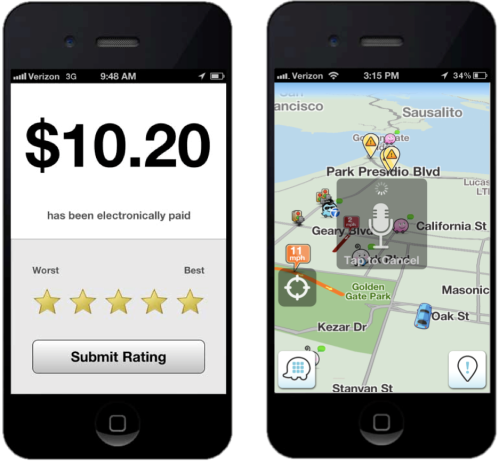

A Sentiment Analysis feature would capture the general mood and sentiment expressed hyper-locally within a defined geofeed in real-time. The automated GeoAlerts feature would make the geofeed king. A GeoAlerts functionality would enable users to trigger specific actions based on different kinds of social media traffic within a given geofeed of interest. For example, I’d like to be notified if the number of pictures posted within my geofeed that are tagged with the words “#earthquake” and “damage” increases by more than 20% in any given hour. Similarly, one could set a geofeed’s GeoAlert for a 10% increase in the number of tweets with the words “cholera” and “diarrhea” (these need not be in English, by the way) in any given 10-minute period. Users would then receive GeoAlerts via automated emails, Tweets and/or SMS. This feature would in effect make GeoFeedia more of a mobile and “hands free” platform, like Waze for example.
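Here is one way such a GeoAlert rule could be sketched in Python. To be clear, this is a hypothetical illustration of the wish-list item, not an existing GeoFeedia feature: the function names, the `(timestamp, text)` post format and the choice to compare the latest window against the one just before it are all assumptions.

```python
from datetime import datetime, timedelta


def count_matching(posts, required_terms, start, end):
    """Count posts in [start, end) whose text contains every required term."""
    terms = [t.lower() for t in required_terms]
    return sum(
        1
        for ts, text in posts
        if start <= ts < end and all(t in text.lower() for t in terms)
    )


def geo_alert(posts, required_terms, now, window, threshold_pct):
    """True if matching posts rose by more than threshold_pct between windows.

    Compares the window ending at `now` with the window just before it.
    Assumption: if the previous window had no matching posts, any matching
    post in the current window triggers the alert.
    """
    current = count_matching(posts, required_terms, now - window, now)
    previous = count_matching(
        posts, required_terms, now - 2 * window, now - window
    )
    if previous == 0:
        return current > 0
    # Integer form of (current - previous) / previous * 100 > threshold_pct.
    return (current - previous) * 100 > threshold_pct * previous


# Made-up posts from inside one geofeed: 5 matches in the earlier hour,
# 7 in the latest hour, plus one post that lacks the word "damage".
now = datetime(2013, 1, 1, 12, 0)
hour = timedelta(hours=1)
posts = (
    [(datetime(2013, 1, 1, 10, 30), "#earthquake damage on Main St")] * 5
    + [(datetime(2013, 1, 1, 11, 30), "More #earthquake DAMAGE downtown")] * 7
    + [(datetime(2013, 1, 1, 11, 45), "#earthquake felt here")]
)

alert = geo_alert(posts, ["#earthquake", "damage"], now, hour, threshold_pct=20)
```

With these sample numbers the count goes from 5 to 7, a 40% increase, so the 20% rule fires. A real implementation would then hand off to email, Twitter or SMS delivery.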

My first blog post on GeoFeedia was entitled “GeoFeedia: Next Generation Crisis Mapping Technology?” The answer today is a definite “Yes!” While the platform was not originally designed with disaster response in mind, the team has since been adding important features that make the tool increasingly useful for humanitarian applications. And GeoFeedia has plans for more exciting developments in 2013. Their commitment to innovation and strong continued interest in supporting digital disaster response are why I’m hoping to work more closely with them in the years to come. For example, our AIDR (Artificial Intelligence for Disaster Response) platform would really add a strong Machine Learning component to GeoFeedia’s search function, in effect enabling the tool to go beyond simple keyword search.